Deploy First, Engineer Later: The AI Risk We Can’t Afford

- Raimund Laqua

- Dec 2, 2025

The sequence matters: proper engineering design must occur before deployment, not afterwards.

by Raimund Laqua, PMP, P.Eng

As a professional engineer with over three decades of experience in highly regulated industries, I firmly believe we can and should embrace AI technology. However, the current approach to deployment poses a risk we simply cannot afford.

Across industries, I’m observing a troubling pattern: organizations are bypassing the engineering design phase and jumping directly from AI research and prototyping to production deployment.

This “Deploy First, Engineer Later” approach (or, as some call it, “Fail First, Fail Fast”) treats AI systems like software products rather than engineered systems that require professional design discipline.

Engineering design goes beyond validation and testing after deployment; it’s a disciplined practice of designing systems for safety, reliability, and trust from the outset.

If we want these qualities in AI systems and in the internal controls that use them, we must engineer them in from the beginning, not retrofit them later.

Here’s the typical sequence organizations follow:

1. Research and prototype development
2. Direct deployment to production systems
3. Hope to retrofit safety, security, quality, and reliability later

What should happen instead:

1. Research and controlled experimentation
2. Engineering design for safety, reliability, and trust requirements
3. Deployment of properly engineered systems

AI research and controlled experimentation have their place in laboratories where trained professionals can systematically study impacts and develop knowledge for practice.

However, we’re witnessing live experimentation in critical business and infrastructure systems, where both businesses and the public bear the consequences when systems fail due to inadequate engineering.

When companies deploy AI without proper engineering design, they’re building systems that don’t account for the most important qualities: safety, security, quality, reliability, and trust. These aren’t features that can be added later; they must be built into the system architecture from the start.

Consider the systems we rely on: medical devices, healthcare, power generation and distribution, financial systems, transportation networks, and many others. These systems require engineering design that considers failure modes, safety margins, reliability requirements, and trustworthiness criteria before deployment. However, AI is being integrated into these systems without this essential engineering work.

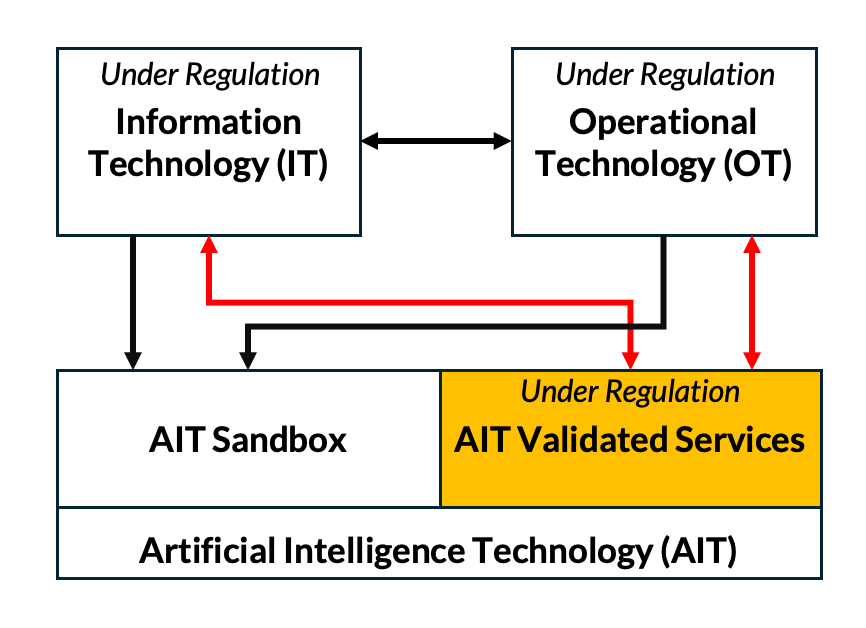

This creates what I call an “operational compliance gap.” Organizations have governance policies and risk management statements, but these don’t translate into the engineering design work needed to build or procure inherently safe and reliable systems.

Without proper engineering design, governance policies become meaningless abstractions. They give the appearance of protection but lack the operational capabilities needed to ensure that what matters most is actually protected.

The risk goes beyond individual organizations. We currently lack enough licensed professional engineers with AI expertise to provide the engineering design discipline critical systems need. Without professional accountability structures, software developers are making engineering design decisions about safety and mission-critical systems without the professional obligations that engineering practice demands.

Professional engineering licensing ensures accountability for proper design practice. Engineers become professionally obligated to design systems that meet safety, reliability, and trust requirements. This creates the discipline needed to counteract the “deploy first, engineer later” approach that’s currently dominating AI adoption.

The consequences of deploying unengineered AI systems aren’t abstract future concerns; they’re immediate risks to operational integrity, business continuity, and public safety. These risks are simply too great for businesses and society to ignore, especially as organizations try to retrofit engineering discipline into systems that were never designed for safety or reliability.

Engineering design can’t be an afterthought. The sequence matters: proper engineering design must occur before deployment, not afterwards.

Deploying systems first and then engineering them is a risk we simply can’t afford.